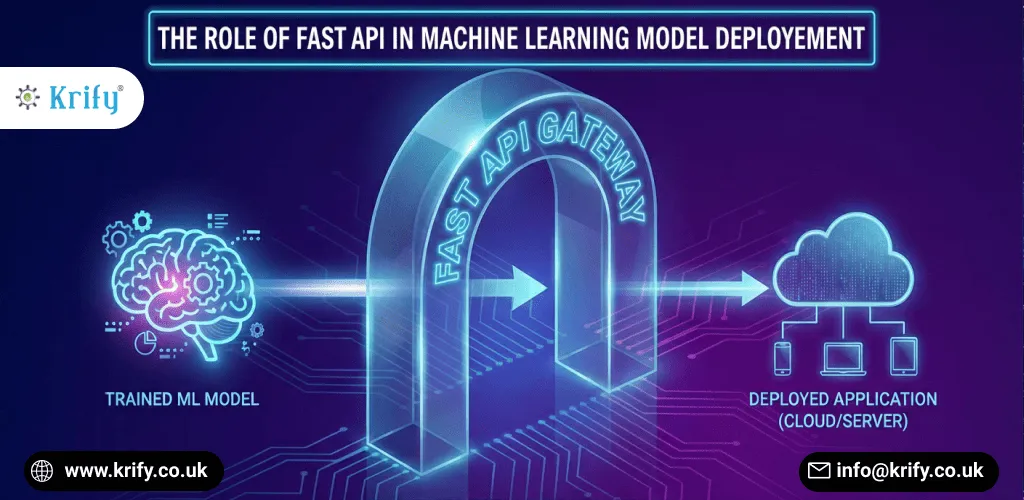

Deploying a machine learning model is often harder than building it. Many teams discover this the moment a trained model needs to move from a notebook into a real product. That’s exactly where FastAPI deployment for machine learning starts to matter. FastAPI has become a popular choice for serving machine learning models because it balances performance, simplicity, and flexibility. At Krify, we’ve seen teams struggle with slow APIs and complex setups, only to find that FastAPI removes much of that friction. It also helps data teams and engineering teams work together more smoothly.

Machine learning doesn’t live in isolation. Models need to integrate with applications, dashboards, and other systems. Therefore, the framework handling deployment plays a critical role in overall success.

FastAPI Deployment in Real ML Workflows

In real projects, machine learning models are constantly updated. New data arrives, models are retrained, and predictions need to stay reliable. FastAPI fits naturally into this workflow.

For example, data scientists can expose model predictions as HTTP endpoints by writing an ordinary Python function and decorating it, with little configuration. Backend developers, in turn, appreciate FastAPI’s clean structure and predictable behavior. As a result, teams move faster without sacrificing clarity.

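Moreover, FastAPI doesn’t hide what’s happening behind the scenes: endpoints are plain Python functions, inputs and outputs are declared types, and hooks such as middleware let teams observe every request. That transparency helps teams debug and optimize deployments more confidently.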

Why Speed Matters in Model Serving

Latency is critical for many ML use cases. Whether it’s fraud detection, recommendations, or real-time analytics, slow responses reduce value.

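FastAPI is built on Starlette and the ASGI standard, so it supports asynchronous request handling natively. While one request waits on I/O, such as a database query or a feature-store lookup, the server can keep serving others. CPU-heavy inference needs a little care: FastAPI runs endpoints declared with plain def in a thread pool, whereas blocking work inside an async def endpoint stalls the event loop. Handled this way, ML models served through FastAPI stay responsive rather than sluggish, even under load.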

This performance advantage becomes more noticeable as usage scales.

Clean API Design Improves Collaboration

Machine learning teams often work alongside frontend and backend teams. Clear API contracts make that collaboration easier.

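FastAPI automatically generates an OpenAPI schema from your endpoint definitions and serves interactive documentation from it, by default Swagger UI at /docs and ReDoc at /redoc. For instance, developers can test endpoints directly in the browser. Because the documented input formats and response structures come straight from the code, misunderstandings between teams become less likely. As a result, integration issues decrease.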

At Krify, we’ve noticed that clear APIs speed up adoption across teams.

Validation and Error Handling Built In

One common issue in ML deployment is invalid input. Bad data leads to bad predictions.

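FastAPI uses Python type hints, backed by Pydantic models, for validation. Therefore, inputs are checked before they reach the model. When a request fails validation, FastAPI rejects it with an HTTP 422 response whose body lists each problem in a structured format. Consequently, debugging becomes simpler and safer.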

This built-in validation protects models from malformed input in production.

Easy Integration with ML Frameworks

FastAPI works well with popular ML libraries like TensorFlow, PyTorch, and scikit-learn. Models can be loaded once and reused across requests.

Moreover, FastAPI provides built-in background tasks and works alongside databases and message queues through standard Python libraries. Therefore, prediction pipelines stay flexible rather than rigid.

This adaptability is especially useful when models evolve over time.

Scalability for Growing Demand

A prototype may start with a few users. However, production systems rarely stay small.

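FastAPI services scale horizontally: as long as a service keeps no per-user state in memory, you can package it in a container, run several replicas behind a load balancer on a cloud platform, and let an orchestration tool such as Kubernetes manage them. As a result, ML services can grow without major redesigns.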

At Krify, we’ve seen FastAPI-based deployments scale reliably as demand increases.

Why Teams Choose FastAPI for Production ML

FastAPI isn’t just a development convenience. It’s a production-ready solution.

Teams choose it because it’s fast, readable, and maintainable. Moreover, it fits modern DevOps practices such as containerization, automated testing, and CI/CD pipelines. Therefore, ML deployment becomes part of a stable system rather than an afterthought.

That reliability makes FastAPI a practical long-term choice.

Conclusion

Machine learning models deliver value only when they’re deployed effectively. Deploying with FastAPI simplifies that process by combining speed, clarity, and scalability. It helps teams move from experimentation to production with confidence. If you’re planning to deploy or scale machine learning models, contact us to explore how FastAPI can support your ML strategy.

Email us -
